Cosmos Cookbook

Overview

NVIDIA Cosmos™ is a platform of state-of-the-art generative world foundation models (WFMs), guardrails, and an accelerated data processing and curation pipeline. This cookbook is a practical guide to the Cosmos open models, offering step-by-step workflows, technical recipes, and concrete examples for building, adapting, and deploying WFMs. It helps developers reproduce successful Cosmos model deployments and customize them for their specific domains.

The Cosmos ecosystem supports the complete Physical AI development lifecycle, from inference using pre-trained models to custom post-training for domain adaptation. Inside, you'll find the following:

- Quick-start inference examples to get up and running fast.

- Advanced post-training workflows for domain-specific fine-tuning.

- Proven recipes for scalable, production-ready deployments.

Latest Updates

| Date | Recipe | Model |

|---|---|---|

| Mar 3 | GR00T-Dreams: Synthetic Trajectory Generation for Robot Learning | Cosmos Predict 2.5, Reason 2 |

| Feb 18 | Cosmos Policy: Fine-Tuning Video Models for Visuomotor Control and Planning (Upgraded to Predict 2.5) | Cosmos Predict 2.5 |

| Feb 18 | 3D AV Grounding Post-Training with Cosmos Reason 1 & 2 | Cosmos Reason 1 & 2 |

| Feb 4 | Worker Safety in a Classical Warehouse | Cosmos Reason 2 |

| Jan 30 | Prompt Guide | Cosmos Reason 2 |

| Jan 29 | Video Search and Summarization with Cosmos Reason | Cosmos Reason 2 |

| Jan 28 | Cosmos Policy: Fine-Tuning Video Models for Visuomotor Control and Planning | Cosmos Predict 2 |

| Jan 27 | Physical Plausibility Prediction with Cosmos Reason 2 | Cosmos Reason 2 |

| Jan 26 | Intelligent Transportation Post-Training with Cosmos Reason 2 | Cosmos Reason 2 |

Upcoming Activities

NVIDIA GTC 2026

Register for NVIDIA GTC, happening March 16–19, 2026, and add the Cosmos sessions to your calendar. Don't miss the must-see keynote from CEO Jensen Huang at SAP Center on Monday, March 16 at 11:00 a.m. PT.

NVIDIA Cosmos Cookoff

Introducing the NVIDIA Cosmos Cookoff — a virtual, four-week physical AI challenge running January 29 – February 26 for robotics, AV, and vision AI builders.

Build with NVIDIA Cosmos Reason and Cosmos Cookbook recipes, from egocentric robot reasoning to physical plausibility checks and traffic-aware models, for a chance to win $5,000, an NVIDIA DGX Spark, and more!

Sponsored by Nebius and Milestone.

Open Source Community Platform

The Cosmos Cookbook is an open-source resource where NVIDIA and the broader Physical AI community share practical workflows, proven techniques, and domain-specific adaptations.

📂 Repository: https://github.com/nvidia-cosmos/cosmos-cookbook

We welcome contributions—from new examples and workflow improvements to bug fixes and documentation updates. Together, we can evolve best practices and accelerate the adoption of Cosmos models across domains.

📊 Physical AI Datasets: Access curated datasets for autonomous vehicles, intelligent transportation systems, robotics, smart spaces, and warehouse environments on the NVIDIA Physical AI Collection on Hugging Face.

Case Study Recipes

The Cosmos Cookbook includes comprehensive use cases demonstrating real-world applications across the Cosmos platform.

Cosmos Predict

Future state prediction and generation

| Workflow | Description | Link |

|---|---|---|

| Inference | Text2Image synthetic data generation for intelligent transportation systems | ITS Synthetic Data Generation |

| Training | Fine-tuning Cosmos Predict 2 for robotic manipulation through latent frame injection for visuomotor control | Cosmos Policy |

| Training | Traffic anomaly generation with improved realism and prompt alignment | Traffic Anomaly Generation |

| Training | Synthetic trajectory data generation for humanoid robot learning | GR00T-Dreams |

| Training | LoRA post-training for sports video generation with improved player dynamics and rule coherence | Sports Video Generation |

Advanced Topics: Refer to Distilling Cosmos Predict 2.5 to learn how to distill the model into a 4-step student using DMD2.

Cosmos Transfer

Multi-control video generation and augmentation

| Workflow | Description | Link |

|---|---|---|

| Guide | Master precise control over video generation using Edge, Depth, Segmentation, and Vis modalities for structural preservation and semantic replacement | Control Modalities Guide |

| Inference | Style-guided video generation using image references with edge/depth/segmentation control | Style-Guided Generation |

| Inference | CARLA simulator-to-real augmentation for traffic anomaly scenarios | CARLA Sim2Real |

| Inference | Multi-control video editing for background replacement, lighting, and object transformation | Real-World Video Manipulation |

| Inference | Domain transfer pipeline for scarce biological datasets using edge-based control and FiftyOne | BioTrove Moths Augmentation |

| Inference | Weather augmentation pipeline for simulation data using multi-modal controls | Weather Augmentation |

| Inference | CG-to-real conversion for multi-view warehouse environments | Warehouse Simulation |

| Inference | Sim2Real data augmentation for robotics navigation tasks | X-Mobility Navigation |

| Inference | Synthetic manipulation motion generation for humanoid robots | GR00T-Mimic |

| Training | ControlNet post-training for spatially-conditioned multiview AV video generation with world scenario maps | Multiview AV Generation |

Cosmos Reason

Vision-language reasoning and quality control

| Workflow | Description | Link |

|---|---|---|

| Guide | Comprehensive prompting guide for Cosmos Reason 2 covering message structure, sampling parameters, and domain-specific patterns | Cosmos Reason 2 Prompt Guide |

| Inference | GPU-accelerated video analysis pipeline for large-scale video summarization, Q&A, and live stream alerts | Video Search and Summarization |

| Inference | Zero-shot safety compliance and hazard detection in industrial warehouse environments | Worker Safety in a Classical Warehouse |

| Inference | Egocentric social and physical reasoning for social robotics | Egocentric Social Reasoning |

| Training | 3D vehicle grounding in autonomous driving with Cosmos Reason 1 & 2 | 3D AV Grounding (Reason 1 & 2) |

| Training | Post-training Cosmos Reason 2 for AV video captioning and VQA with production data | AV Video Caption VQA (Reason 2) |

| Training | Post-training Cosmos Reason 2 for intelligent transportation scene understanding with WTS data | Intelligent Transportation (Reason 2) |

| Training | Physical plausibility prediction for video quality assessment with Cosmos Reason 2 | Physical Plausibility (Reason 2) |

| Training | Physical plausibility check for video quality assessment | Physical Plausibility (Reason 1) |

| Training | Spatial AI understanding for warehouse environments | Spatial AI Warehouse |

| Training | Intelligent transportation scene understanding and analysis | Intelligent Transportation (Reason 1) |

| Training | AV video captioning and visual question answering for autonomous vehicles | AV Video Caption VQA (Reason 1) |

| Training | Temporal localization for MimicGen robot learning data generation | Temporal Localization |

| Training | Wafer map anomaly classification with supervised fine-tuning on WM-811k | Wafer Map Classification |

Cosmos Curator

| Workflow | Description | Link |

|---|---|---|

| Curation | Curate video data for Cosmos Predict 2 post-training | Predict 2 Data Curation |

| Analysis | Advanced video clustering using Time Series K-Means on embedding trajectories | Video Clustering with Time Series K-Means |
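
To make the idea behind the clustering recipe concrete, the following is a minimal sketch of k-means over embedding trajectories. It is not the recipe's implementation: it assumes every video has already been converted into an equal-length sequence of embedding vectors, and it uses a plain Euclidean distance between trajectories, whereas the recipe may use a different metric or a dedicated library. The function names `traj_distance`, `mean_trajectory`, and `time_series_kmeans` are hypothetical.

```python
import random

def traj_distance(a, b):
    """Squared Euclidean distance between two equal-length trajectories.

    Each trajectory is a list of T embedding vectors (lists of floats).
    """
    return sum((x - y) ** 2 for va, vb in zip(a, b) for x, y in zip(va, vb))

def mean_trajectory(trajs):
    """Element-wise mean of a non-empty list of equal-shape trajectories."""
    n = len(trajs)
    steps, dim = len(trajs[0]), len(trajs[0][0])
    return [[sum(t[i][j] for t in trajs) / n for j in range(dim)]
            for i in range(steps)]

def time_series_kmeans(trajs, k, n_iter=20, seed=0):
    """Cluster trajectories with Lloyd's algorithm (assumes n_iter >= 1).

    Returns (labels, centroids); labels[i] is the cluster index of trajs[i].
    """
    rng = random.Random(seed)
    centroids = rng.sample(trajs, k)  # initialise from k distinct inputs
    labels = None
    for _ in range(n_iter):
        # Assignment step: nearest centroid for every trajectory.
        new_labels = [
            min(range(k), key=lambda c: traj_distance(t, centroids[c]))
            for t in trajs
        ]
        if new_labels == labels:  # assignments are stable: converged
            break
        labels = new_labels
        # Update step: each centroid becomes the mean of its members.
        for c in range(k):
            members = [t for t, lab in zip(trajs, labels) if lab == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = mean_trajectory(members)
    return labels, centroids

# Toy data (made up): two videos near the origin, two far away.
videos = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.1, 0.0], [0.0, 0.1]],
    [[10.0, 10.0], [10.0, 10.0]],
    [[10.1, 10.0], [10.0, 10.1]],
]
labels, _ = time_series_kmeans(videos, k=2)
```

Treating each video as a whole trajectory, rather than averaging its frame embeddings into a single vector, lets the clustering separate videos that visit similar content in a different temporal order.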

End-to-End Workflows

| Workflow | Description | Link |

|---|---|---|

| GR00T-Dreams | End-to-end pipeline for synthetic robot trajectory generation: post-train Cosmos Predict 2.5 on GR1 data, generate trajectories, and use Cosmos Reason 2 as a video critic for rejection sampling | GR00T-Dreams |

| SDG Pipeline | Complete synthetic data generation pipeline for traffic scenarios using CARLA, Cosmos Transfer 2.5, and Cosmos Reason 1 | Smart City SDG |
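
The GR00T-Dreams row above describes using a video critic for rejection sampling. The sketch below shows only the generic filtering pattern, under stated assumptions: `rejection_sample` is a hypothetical helper, the critic is any callable that returns a numeric score where higher means better, and the threshold of 0.5 is arbitrary. It does not call Cosmos Reason 2 or reproduce the recipe's scoring prompt.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")

def rejection_sample(
    candidates: Iterable[T],
    critic: Callable[[T], float],
    threshold: float = 0.5,
) -> Tuple[List[Tuple[T, float]], List[Tuple[T, float]]]:
    """Split candidates into (accepted, rejected) by critic score.

    A candidate is accepted when critic(candidate) >= threshold.
    Scores are kept alongside each item so decisions can be audited later.
    """
    accepted, rejected = [], []
    for item in candidates:
        score = critic(item)
        bucket = accepted if score >= threshold else rejected
        bucket.append((item, score))
    return accepted, rejected

# Stand-in critic: a lookup of made-up scores. In a real pipeline this
# would be replaced by a call to a video critic model.
fake_scores = {"clip_a": 0.9, "clip_b": 0.2, "clip_c": 0.7}
accepted, rejected = rejection_sample(fake_scores, fake_scores.get)
# accepted -> [("clip_a", 0.9), ("clip_c", 0.7)]
# rejected -> [("clip_b", 0.2)]
```

Keeping the rejected items together with their scores makes it straightforward to inspect borderline cases and tune the threshold.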

Cosmos Models for Physical AI

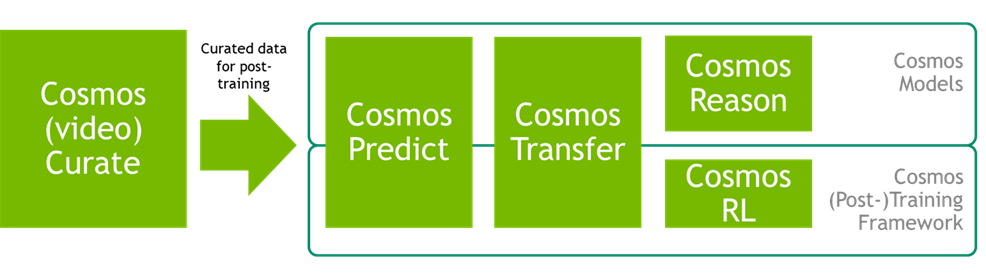

The Cosmos family of open models consists of five core repositories, each targeting specific capabilities in the AI development workflow:

Cosmos Curator - A GPU-accelerated video curation pipeline built on Ray. Supports multi-model analysis, content filtering, annotation, and deduplication for both inference and training data preparation.

Cosmos Predict - A diffusion transformer for future state prediction. Provides text-to-image and video-to-world generation capabilities, with specialized variants for robotics and simulation. Supports custom training for domain-specific prediction tasks.

Cosmos Transfer - A multi-control video generation system with ControlNet and MultiControlNet conditioning (including depth, segmentation, LiDAR, and HDMap). Includes 4K upscaling capabilities and supports training for custom control modalities and domain adaptation.

Cosmos Reason - A 7B vision-language model for physically grounded reasoning. Handles spatial/temporal understanding and chain-of-thought tasks, with fine-tuning support for embodied AI applications and domain-specific reasoning.

Cosmos RL - A distributed training framework supporting both supervised fine-tuning (SFT) and reinforcement learning approaches. Features elastic policy rollout, FP8/FP4 precision support, and optimization for large-scale VLM and LLM training.

All models include pre-trained checkpoints and support custom training for domain-specific adaptation. The diagram below illustrates component interactions across inference and training workflows.

ML/Gen AI Concepts

The cookbook is organized around key concepts spanning (controlled) inference and training use cases:

1. Prompt Guide - Learn effective prompting strategies for Cosmos models. This covers message structure, media ordering, sampling parameters, and domain-specific patterns to help you get the best results from Cosmos Reason and other vision-language models.

2. Control Modalities - Master precise control over video generation with Cosmos Transfer 2.5 using Edge, Depth, Segmentation, and Vis modalities. This covers structural preservation, semantic replacement, lighting consistency, and multi-control approaches for achieving high-fidelity, controllable video transformations.

3. Data Curation - Use Cosmos Curator to prepare your datasets with modular, scalable processing pipelines. This includes splitting, captioning, filtering, deduplication, task-specific sampling, and cloud-native or local execution.

4. Model Post-Training - Fine-tune foundation models using your curated data. This covers domain adaptation for Predict (2 and 2.5), Transfer (1 and 2.5), and Reason 1, setup for supervised fine-tuning, LoRA, or reinforcement learning, and use of Cosmos RL for large-scale distributed rollout.

5. Evaluation and Quality Control - Ensure your post-trained models are aligned and robust through metrics, visualization, and qualitative inspection. Leverage Cosmos Reason 1 as a quality filter, for example as the critic in rejection sampling of synthetic data.

6. Model Distillation - Compress large foundation models into smaller, efficient variants while preserving performance. This includes knowledge distillation techniques for Cosmos models, teacher-student training setups, and deployment optimization for edge devices and resource-constrained environments.
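
As an illustration of the deduplication step mentioned under Data Curation (item 3 above), here is a minimal sketch of greedy near-duplicate removal on clip embeddings. It is not the Cosmos Curator implementation: the `deduplicate` function, the use of cosine similarity, and the 0.95 threshold are all assumptions chosen for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def deduplicate(embeddings, threshold=0.95):
    """Return indices of clips to keep.

    Clips are visited in order; a clip is kept only if its similarity to
    every previously kept clip is below the threshold. This is quadratic
    in the number of kept clips, so it suits small batches only.
    """
    kept = []
    for i, emb in enumerate(embeddings):
        if all(cosine(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept

# Made-up 2-D embeddings: the second clip nearly duplicates the first.
clips = [[1.0, 0.0], [0.999, 0.01], [0.0, 1.0]]
keep = deduplicate(clips)  # -> [0, 2]
```

Because the pass is greedy, the first clip in each group of near-duplicates is the one that survives, so the input order acts as an implicit priority.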

Gallery

Visual examples of Cosmos Transfer results across Physical AI domains:

- Robotics Domain Adaptation - Sim-to-real transfer for robotic manipulation with varied materials, lighting, and environments.

- Autonomous Vehicle Domain Adaptation - Multi-control video generation for driving scenes across different weather, lighting, and time-of-day conditions.

Quick Start Paths

This cookbook provides flexible entry points for both inference and training workflows. Each section contains runnable scripts, technical recipes, and complete examples.

- Inference workflows: Getting Started for setup and immediate model deployment

- Cloud deployments: Cloud platforms for ready-to-launch instances on Nebius and Brev, with more platforms to come

- Physical AI datasets: NVIDIA Physical AI Collection on Hugging Face for curated datasets across domains

- Data processing: Data Processing & Analysis for content analysis workflows

- Training workflows: Model Training & Fine-tuning for domain adaptation

- Case study recipes: Case Study Recipes organized by application area